Neural networks were originally inspired by the way the human brain learns and processes information. At their core, they consist of layers of neurons, where each neuron is a mathematical function that takes numerical inputs, processes them, and passes its output to the next layer. This continues layer by layer until the final layer produces a prediction.

Neural networks power some of the most impressive AI applications today, including speech recognition, image classification, natural language processing (NLP), medical diagnostics, and recommendation systems. But how do they actually work? Let’s break it down.

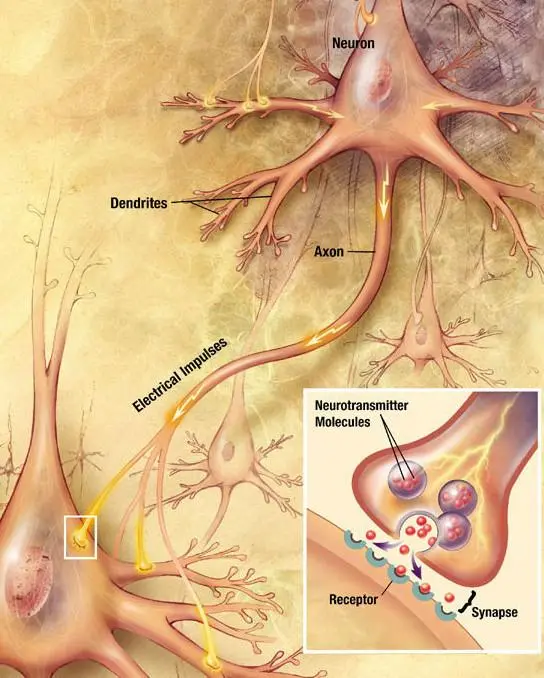

How Biological Neurons Work

Neural networks take inspiration from biological neurons, the fundamental building blocks of the human brain. Here’s a simplified view of how neurons work:

Receiving Inputs – Neurons receive electrical signals from other neurons through their dendrites.

Processing Information – The cell body (soma) of the neuron integrates these signals and determines whether the neuron fires.

Sending Outputs – The neuron then transmits an electrical impulse through its axon to other neurons.

Repeating the Process – This output becomes the input for the next neuron, forming a network of connections that enable learning and decision-making.

Artificial Neural Networks

Artificial neural networks (ANNs) are simplified mathematical models inspired by biological neurons. However, they do not replicate the brain’s functionality exactly—instead, they use computational techniques to approximate learning.

How an Artificial Neuron Works

A neuron in an ANN follows a simple process:

It receives one or more numerical inputs.

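It processes the inputs with a mathematical function, typically by computing a weighted sum of the inputs plus a bias and then applying an activation function to the result.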

It generates an output that is passed to the next neuron.

The key difference from biological neurons is that artificial neurons perform mathematical operations on data instead of transmitting electrical impulses.
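The three steps above can be sketched in a few lines of Python. This is a minimal illustration, not a library implementation: it assumes the common convention of a weighted sum plus a bias followed by a sigmoid activation, and the helper names (`neuron`, `sigmoid`) and all parameter values are hypothetical.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """A single artificial neuron (hypothetical helper for illustration).

    1. Receive numerical inputs.
    2. Process them: weighted sum plus bias, then the sigmoid activation.
    3. Return the output, which would be passed on to the next neuron.
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical inputs and parameters, chosen only for illustration.
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)  # z = 0.5 - 0.5 + 0.0 = 0.0, and sigmoid(0) = 0.5
```

The weights control how much each input contributes, and the bias shifts the point at which the neuron's output crosses 0.5.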

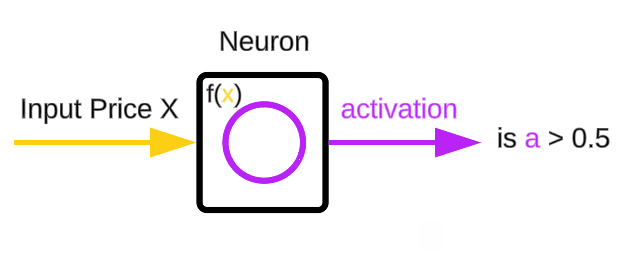

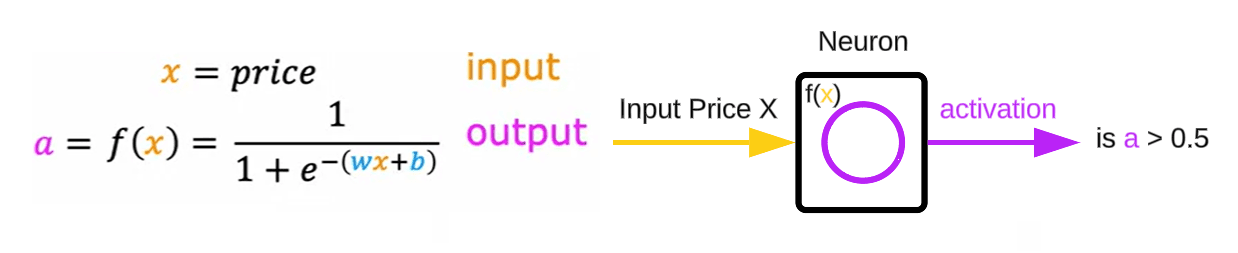

Neural Network Prediction Example

Let’s look at a real-world application: product demand prediction for retailers. A retailer wants to predict whether a product will become a bestseller based on its price. The neural network model follows these steps:

Input (X): The price of the product is fed into the model.

Computation: A logistic regression model processes the input by applying the sigmoid function to a weighted version of the price plus a bias.

Activation (a): The function outputs a probability, indicating the likelihood of the product being a top seller.

The output of the sigmoid function is called the activation and is denoted by the letter a.

Activation is a term borrowed from neuroscience: it refers to how strongly a neuron is firing, that is, how much output it sends to the neurons downstream from it.
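For this single-input example, the computation and activation can be written out explicitly. The symbols w (weight) and b (bias) are our notation for the neuron's parameters, which a real model would learn from data; the example itself does not name them. Here x is the product price and g is the sigmoid (logistic) function.

```latex
z = w x + b, \qquad a = g(z) = \frac{1}{1 + e^{-z}}
```

Because g(z) always lies strictly between 0 and 1, the activation a can be read as the probability that the product becomes a bestseller.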

This model can be represented as a single neuron:

Inputs: Product price.

Mathematical Function: Logistic regression.

Output: Probability that the product will be a bestseller.

In other words, the neuron is a single computational unit: it takes one or more inputs (the price of the product), performs a calculation on them using a mathematical function (logistic regression), and produces an output, also called the activation (the probability that the product becomes a bestseller).
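Here is the bestseller neuron as a short Python sketch. The weight and bias values are made up purely for illustration (a real model would learn them from historical sales data), and the function name `bestseller_probability` is hypothetical.

```python
import math

# Hypothetical parameters: a negative weight means higher prices lower the
# predicted chance of becoming a bestseller. Real values would be learned.
W = -0.1
B = 2.0

def bestseller_probability(price: float, w: float = W, b: float = B) -> float:
    """Single-neuron logistic regression: activation a = sigmoid(w * price + b)."""
    z = w * price + b
    return 1.0 / (1.0 + math.exp(-z))

for price in (10.0, 20.0, 30.0):
    a = bestseller_probability(price)
    print(f"price={price:5.1f}  a={a:.3f}")
```

With these made-up parameters, the activation drops from about 0.731 at a price of 10 to 0.500 at 20 and about 0.269 at 30, which matches the intuition encoded by the negative weight.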

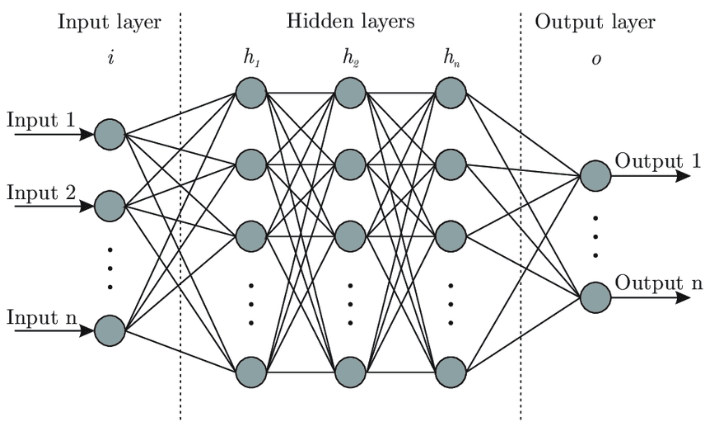

Building a Multi-Layer Neural Network

While a single neuron can handle basic predictions, complex problems require multiple layers of neurons. Building a neural network, then, means taking many neurons and grouping them into layers.

How Neural Networks Are Structured

Input Layer – Receives the raw data, a vector of numbers usually written as X.

Hidden Layers – Intermediate layers, each made up of multiple neurons, perform calculations on their inputs and pass the resulting vector of activations to the next layer.

Output Layer – The process repeats layer by layer until the final layer produces the prediction.

Each hidden layer extracts features from the data, making it easier for subsequent layers to learn useful patterns and make accurate predictions.
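To make the layer-by-layer flow concrete, here is a minimal forward pass in plain Python. It is a sketch under stated assumptions: every neuron uses the sigmoid activation, and the network shape (one input, a hidden layer of three neurons, one output neuron), the helper names `dense_layer` and `forward`, and all weight values are hypothetical, chosen only to show how each layer's activation vector becomes the next layer's input.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases):
    """One layer: neuron j computes sigmoid(sum_i inputs[i] * weights[j][i] + biases[j]).

    Returns the layer's activation vector (one value per neuron).
    """
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Feed input vector x through each (weights, biases) layer in order."""
    a = x
    for weights, biases in layers:
        a = dense_layer(a, weights, biases)  # this layer's output feeds the next
    return a

# Hypothetical network: 1 input -> hidden layer of 3 neurons -> 1 output neuron.
layers = [
    ([[-0.1], [0.05], [-0.2]], [2.0, -1.0, 1.5]),  # hidden layer
    ([[1.0, -0.5, 0.8]], [-0.3]),                 # output layer
]

x = [20.0]  # input vector X, e.g. a single product price
hidden = dense_layer(x, *layers[0])
prediction = forward(x, layers)
print("hidden activations:", [round(v, 3) for v in hidden])
print("prediction:", round(prediction[0], 3))
```

Each layer is just a list of neurons sharing the same inputs, so stacking layers amounts to calling `dense_layer` repeatedly on the previous layer's activations.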

Key Considerations When Designing a Neural Network

When building a neural network, some of the most important decisions are:

How many layers should the network have?

How many neurons per layer are needed?

What activation functions should be used?

These choices significantly impact the performance of the learning algorithm. We’ll dive deeper into neural network architecture design in a future blog.

Conclusion

Neural networks are powerful tools for solving complex problems, from predicting demand in retail to diagnosing diseases. By understanding their architecture and functionality, you can start building your own models and improving their efficiency.

Stay tuned for the next blog, where we’ll explore how to choose the optimal number of layers and neurons for your network! 🚀
